C++23 is here, and compiler developers are slowly metabolizing the standardese into something we can use. One of the main highlights of this release is a language feature called “explicit object parameters,” which not only cleans up the syntax of the typical uses of the this keyword, but allows for brand new constructs that help tie up loose ends introduced by earlier language features, deduplicate code, and help untangle template spaghetti. A creative use of the “explicit object parameter” can even implement Rust-style traits with no boilerplate! Most of the sections below will build up to why explicit object parameters (just “EOP” going forward) are useful, in case you have only a passing familiarity with C++ – experts are advised to skip ahead.

Stating the warts

C++ is not an elegant language.

class Ghost {

int peekaboo = 5;

public:

void setToEight(int peekaboo) {

peekaboo = 8; // (1)

}

void setToEightAgain() {

peekaboo = 8; // (2)

}

};

Lines (1) and (2) are identical, but (1) changes the value of the function parameter, and (2) changes the value of the class member. This is because when an identifier doesn’t refer to anything in its current scope, the compiler checks if it matches a class member. This makes code ambiguous and dependent on context. To disambiguate, you can prepend this->:

this->peekaboo = 8;

Or add a prefix to class members:

class Ghost {

int m_peekaboo = 5;

public:

void setToEight(int peekaboo) {

m_peekaboo = 8;

}

};

Prefixes are aesthetically unpleasant. Using this-> works, but it remains entirely optional, and many people prefer to leave it out when it’s not required. Neither approach eliminates the rule of implicit this->, so we are still susceptible to bugs caused by accidentally referring to the wrong variable.
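To see the shadowing problem in action, here is a minimal sketch. The two method names (setToEightShadowed, setToEightExplicit) and the get() accessor are made up for this illustration and are not part of the original example.

```cpp
class Ghost {
    int peekaboo = 5;

public:
    // The parameter shadows the member, so only the local copy changes.
    void setToEightShadowed(int peekaboo) {
        peekaboo = 8;
        (void)peekaboo; // only here to silence "unused" warnings
    }

    // this-> names the member explicitly, whatever the parameter is called.
    void setToEightExplicit(int peekaboo) {
        (void)peekaboo;
        this->peekaboo = 8;
    }

    // Hypothetical accessor so the member can be observed from outside.
    int get() const { return peekaboo; }
};
```

Calling setToEightShadowed() on a fresh Ghost leaves get() at 5, while setToEightExplicit() changes it to 8. Both bodies compile without complaint, which is the whole problem.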

Public survey

How do other languages handle this? Let’s go back in time, and have a look at how OOP can be emulated in C:

struct Ghost {

int peekaboo;

};

void Ghost__setToEight(struct Ghost* this) {

this->peekaboo = 8;

}

Self-explanatory, the name lookup is trivial. If we wanted to emulate function members as well, we could keep a pointer to the setToEight() function inside the struct. Let’s see about Python:

class Ghost:

peekaboo = 5

def setToEight(self):

self.peekaboo = 8

Here, if a member function wants to modify the instance, it needs that self parameter. And in Rust?

struct Ghost {

peekaboo: i32,

}

impl Ghost {

fn setToEight(&mut self) {

self.peekaboo = 8;

}

}

More or less the same as Python here, we just need mut because in Rust everything is immutable by default unless specified otherwise.

Python and Rust, along with a number of other languages, settled on making the this/self parameter explicit. It’s a little more typing, but name lookup rules become simpler and less magical, making code more readable at a glance.

You cannot escape this

C++ tries to hide the existence of this parameter, but with some more advanced features you need to be aware of its existence and reason about it anyway. One example is member function ref-qualifiers:

class Ghostbuster {

public:

void bust() &;

void bust() &&;

void bust() const &;

void bust() const &&;

};

This is a famously confusing syntax. How can a function itself be const, or a reference? Why are multiple functions with the same name and argument list allowed?

The answer is that these are the qualifiers of the implicit this. If the instance itself is const or an rvalue (for example, it’s a temporary that was just returned from another function by value), overload resolution chooses the function with the best matching qualifier. If you assume that this is a real parameter, it makes perfect sense why the overloads are allowed – the parameter lists are all different.
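A quick way to convince yourself is to make each overload report which one was picked. This is only a sketch: the string return values and the makeGhostbuster() helper are assumptions added for the demonstration.

```cpp
#include <string>
#include <utility>

class Ghostbuster {
public:
    // Each overload reports which qualifier set overload resolution selected.
    std::string bust() & { return "lvalue"; }
    std::string bust() && { return "rvalue"; }
    std::string bust() const & { return "const lvalue"; }
    std::string bust() const && { return "const rvalue"; }
};

// Hypothetical helper that returns a temporary by value.
Ghostbuster makeGhostbuster() { return {}; }
```

With a plain Ghostbuster variable, bust() picks the & overload, a const variable picks const &, makeGhostbuster().bust() picks &&, and std::move() on a const instance picks const &&.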

One more example. In order to make C++ more functional, you can #include <functional>. You then get a bunch of great features, like partial application:

#include <functional>

void frobnicate(int x, int y) {}

struct Spline {

void reticulate(int z, int w) {}

};

int main() {

auto frobnicateWithXOfEight = std::bind_front(&frobnicate, /* x = */ 8);

frobnicateWithXOfEight(42); // x = 8, y = 42

Spline spline;

auto reticulateWithXOfEight = std::bind_front( // *record scratch* what do we do here?

std::bind_front takes an existing function and creates a functor (an object that acts like a function, in that it can be called with parentheses), “prefilling” some of the arguments starting from the left. Can we bind a member function, though? Do we use spline.reticulate or Spline::reticulate? How does it know which instance to call it on, and can we provide that instance later?

Spline spline;

auto reticulateWithXOfEight = std::bind_front(&Spline::reticulate, spline, 8);

reticulateWithXOfEight(42); // this = spline, z = 8, w = 42?

We bind the first parameter – which is actually the implicit this. C++ hides it most of the time, but it’s still there. With EOP, we can make this far less confusing.

Check your self

Fast-forward to 2023 – the future is now, and we can do this:

class Ghost {

int peekaboo;

public:

void setToEight(this Ghost& self) {

self.peekaboo = 8;

// peekaboo = 8; // (1)

// this->peekaboo = 8; // (2)

}

};

The syntax is remarkably simple – insert this before the first parameter, and it becomes the instance. You can make it Ghost const&, Ghost&&, or any other allowed qualifiers. Implicit member access like in (1) is now a compile-time error in any function where the explicit this is present, and this itself cannot be used like in (2), either.

It’s rather redundant to put in Ghost as the type every time though, it’s almost always the type we want there anyway. Can we make it a template?

class Ghost {

int peekaboo;

public:

template<typename T>

void setToEight(this T&& self) {

self.peekaboo = 8;

}

};

When the method is called, the type T gets deduced from the object it is called on (Ghost& for a non-const lvalue, Ghost for an rvalue), and everything works the same. (The && qualifier is there so that the function works for any reference type, due to C++’s reference collapsing rules. The details aren’t important here.)

If you prefer, you can also use the shorthand for templated function parameters:

class Ghost {

int peekaboo;

public:

void setToEight(this auto&& self) {

self.peekaboo = 8;

}

};

This is the form you’ll see most often in code that uses this feature.

With the type of this being explicit and controllable with a template, we can remove duplication of const/non-const versions of member functions, create recursive lambdas, or even pass this by value. More usage examples are available in Microsoft’s writeup of the feature. I recommend having a look at it before continuing to get a better grasp on EOP.

Superpowers

First, a quick primer on Rust traits. They are an interface that a class can implement – a named set of functionality. A trait called Meowable might require anything that implements it to have a meow() method. Traits can also provide a default implementation, which is used if the class doesn’t override it. Any function can now require its arguments to have a specific trait instead of locking down the type entirely. It’s a clean way of achieving polymorphism without the complexity cost of full-blown OOP. You can have a look at some examples in Rust docs.

At a glance, this seems similar to C++ inheritance – the trait is a base class, the required method is pure virtual, and the function argument’s type is a reference to the base class. There’s one major difference, though – Rust traits used this way are entirely static dispatch (dynamic dispatch is opt-in via dyn). There are no vtables, no virtual calls, it’s all resolved at compile-time. Like templates, without the templates.

This could be achieved in C++ before with enough template magic, but the trick is in making it readable enough that it’s a net positive for your code. Thanks to EOP, this is now possible. Here’s a C++ trait:

class Doglike {

public:

auto bark(this auto&& self) -> std::string requires false;

auto wag(this auto&& self) -> std::string { return "wagwag"; }

};

A trait is just a class that uses EOP for its member functions. bark() is a function that every trait user must implement, and wag() has a default implementation so implementing it is optional. The only new bit is requires false – we’ll explain that later. It seems like we’re setting up an abstract class, but virtual is nowhere to be seen.

Now, let’s “implement” this “trait”:

class Fido:

public Doglike

{

public:

auto bark(this auto&& self) -> std::string { return "bark!"; }

};

We’re just inheriting from it, and implementing the required method by copy-pasting the signature. Let’s analyze the usage code:

Fido fido;

std::print("{}\n", fido.bark());

std::print("{}\n", fido.wag());

It shouldn’t be too difficult to see why we can call wag(). The name is not found within the class but it’s found within the base class, so that one is used. With bark(), the name is found in both, so just like with normal inheritance, name lookup stops at the derived class’s version, which hides the one in the base. So far, so good. Nothing is different from standard inheritance yet, but now it’s time to invoke static polymorphism:

void barkTwice(std::derived_from<Doglike> auto dog) {

std::print("{} {}\n", dog.bark(), dog.bark());

}

int main() {

Fido fido;

barkTwice(fido);

}

The X auto param syntax is a shorthand for constraining a template argument. auto dog would match any type, std::derived_from<Doglike> auto dog matches only types T where the concept std::derived_from<T, Doglike> is true – so, only classes that “implemented” the “trait”.

With inheritance, this function would accept a reference to Doglike instead. Then, to find Fido’s overridden definition of bark(), the call would be a virtual call – the vtable of the class would be used to call the correct function. However, because this is instead a template, the argument dog is always its real type, in this case Fido. The lookup of bark() in the function is then the same as in the earlier usage code. We could change auto to auto& to accept whatever type is passed by reference instead of copying it in.

Let’s imagine what would happen if Fido never implemented bark(). At the call site, the compiler gathers a set of all possible overloads and templates that could be used, which in this case is only the declaration in Doglike. Then the elimination starts – much like with SFINAE, every candidate that can’t be used is removed from the set. requires false is a constraint that can never be satisfied, so the declaration in Doglike is removed immediately, and the set is now empty. We get a compiler error – out of all the functions that can be called, none of them are valid.

If requires false were removed, the code would actually compile! The call site would be referring to a specialization of the template that is declared but never defined, hoping for it to be implemented somewhere else. It’s not, so the linker gives us an undefined symbol error. It would be preferable to catch this at compile-time instead, so requires false is the equivalent of = 0 to make the function “pure virtual”.

Finally, you might want to wrap the concept as syntactic sugar:

template<typename T, typename U>

concept implements = std::derived_from<T, U>;

void barkTwice(implements<Doglike> auto dog) {

There are always caveats

As often as C++ features come out of the oven half-baked, EOP comes surprisingly complete. It’s a shame that it can’t be used in constructors or destructors, but that doesn’t affect the “trait” construct. A small issue is that implementing a trait but not all of the required methods is not an error by itself – it only becomes one when the unimplemented method is called.

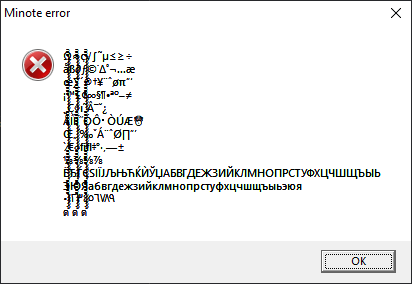

Another problem, which it shares with a lot of modern C++ features, is that every function involved here – both the trait member functions and the functions that require a trait – is now a template, and thus its definition can’t be separated into a .cpp file. This problem is resolved by modules, where non-template functions and template functions behave largely the same, and the distinction disappears. But… there is a problem. As of the time of writing:

- The only compiler that supports EOP is MSVC.

- The only compiler that supports modules (in a usable state) is MSVC.

- MSVC doesn’t support both EOP and modules at the same time.

😔